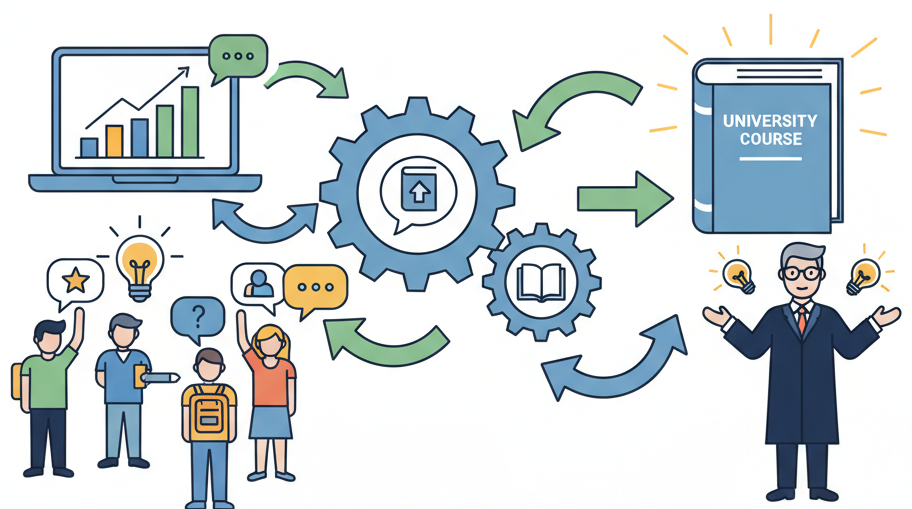

A university professor and teaching assistants analyzed end-of-semester student feedback to make evidence-based improvements to a large undergraduate course, boosting student satisfaction and learning outcomes.

The course had over 200 students, and the anonymous end-of-semester surveys generated a huge volume of qualitative feedback. The teaching team found it difficult to move beyond their own general impressions and identify specific, actionable changes that would genuinely improve the student experience for the next cohort.

The professor collected all the anonymous, open-ended feedback from the official university survey system. The comments related to lecture clarity, assignment difficulty, TA sessions, and the final exam. These were all compiled into a single, lengthy document, representing the raw voice of the students.

The goal, set in the “Prepare” step of the planner, was academic and student-focused: “Analyze student feedback to identify 2-3 key areas for course revision for the next semester.” The planning team consisted of the professor and two TAs, ensuring that both the high-level pedagogical strategy and the on-the-ground student interactions were represented.

The “Conduct” plan outlined a collaborative 2-hour meeting. Using a shared Google Doc as a simple digital whiteboard, the team would read through the feedback document together. They would copy and paste each distinct piece of feedback under thematic headings that they would create as they went along, such as “Lecture Pace,” “Assignment Feedback,” and “Quiz Structure.”

In the “Analyze” step, they planned to first look at the sheer size of the thematic groups to see where the bulk of the comments lay. For the largest ones, they would then have a pedagogical discussion about the effort required to make a change (e.g., redesigning a single assignment vs. re-recording a whole lecture series) to find the most efficient yet impactful improvements.
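The first part of that step, sizing the thematic groups, can be sketched in a few lines of Python. This is only an illustration and not part of the team's actual workflow, which used a shared Google Doc: the `tagged_comments` data and the `rank_themes` helper are hypothetical, and the sketch assumes each comment has already been filed under exactly one theme.

```python
from collections import Counter

# Hypothetical (theme, comment) pairs standing in for the feedback
# that the team pasted under thematic headings.
tagged_comments = [
    ("Quiz Structure", "The weekly quizzes feel unfair."),
    ("Quiz Structure", "Too much stress every week."),
    ("Lecture Pace", "Lectures move too fast."),
    ("Assignment Feedback", "Feedback arrives too late."),
    ("Quiz Structure", "One bad quiz ruins my grade."),
    ("Lecture Pace", "Please slow down in week 3."),
]


def rank_themes(pairs):
    """Return (theme, comment_count) tuples, largest group first."""
    counts = Counter(theme for theme, _ in pairs)
    return counts.most_common()


ranking = rank_themes(tagged_comments)
for theme, count in ranking:
    print(f"{theme}: {count} comments")
```

The count only shows where the comments cluster. Weighing the effort of each possible change remains a judgment call for the discussion described above.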

For “Implement,” the plan was to create a “Course Changes for Next Semester” summary document based on their findings. Crucially, they planned to share this with the next cohort of students during the first lecture to demonstrate that their feedback was valued and acted upon. The primary metric would be comparing the quantitative survey scores year-over-year.

Following the plan from the report, the team made a surprising discovery. While they had been worried about their lecture content, the affinity map in the Google Doc showed that the largest group of negative comments was about the “perceived unfairness and high stress of the weekly quizzes.” Another large theme was “difficulty connecting abstract lecture concepts to the practical assignments.” Armed with this clear data, they decided to change the quizzes to a “best 8 out of 10” grading model to reduce stress and to add a short “how this connects” section at the end of each lecture.
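To make the quiz change concrete, here is a minimal sketch of how a "best 8 out of 10" score could be computed. The source does not describe the actual grading rules, so the details are assumptions: the hypothetical `best_n_average` helper averages the highest 8 scores, and a missed quiz is assumed to be recorded as 0.

```python
def best_n_average(scores, n=8):
    """Average the n highest quiz scores, dropping the rest.

    Assumption: `scores` has one entry per quiz, with a missed
    quiz recorded as 0.
    """
    if n <= 0:
        raise ValueError("n must be positive")
    if len(scores) < n:
        raise ValueError("need at least n scores")
    kept = sorted(scores, reverse=True)[:n]
    return sum(kept) / n


# Hypothetical student: ten quizzes scored out of 10, two of them missed.
quiz_scores = [9, 8, 10, 0, 7, 9, 8, 0, 10, 9]
plain_average = sum(quiz_scores) / len(quiz_scores)
best_8_average = best_n_average(quiz_scores)
print(plain_average, best_8_average)
```

Under these assumptions, the two missed quizzes are dropped rather than pulling down the result, which is the stress-reducing effect the team was aiming for.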

The Affinity Mapping Planner provided a structured way to systematically process student voices, ensuring that course improvements were targeted at what mattered most to the learners. The changes addressed student stress and comprehension directly, rather than being based on the instructors’ assumptions.